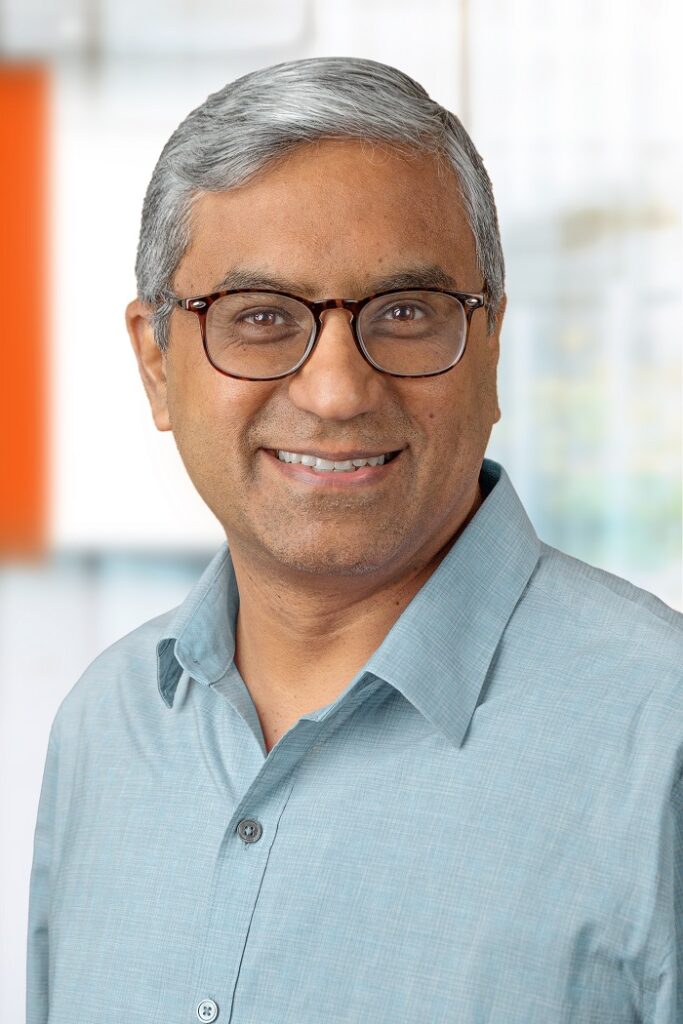

By SolarWinds Senior Vice President of Engineering Krishna Sai

Krishna Sai leads the engineering, technology, and architecture teams at SolarWinds. He is a seasoned leader and entrepreneur with over two decades of experience scaling global engineering teams and building winning products across multiple industries. Sai has held leadership roles at Atlassian, Groupon, and Polycom, co-founded two technology companies, and holds several patents.

New data is being created and accumulated at a relentless pace. One recent estimate found a whopping 328.77 million terabytes of data are created each day. Managing this flood of data in all its forms is no easy task – but the potential business insights that can be gleaned from this data are enormous.

Government organizations have opened their eyes to the vast amounts of data they generate and the various ways this data could be leveraged to improve citizen services. Deep learning, a branch of machine learning (ML) within artificial intelligence (AI) that uses multi-layered neural networks to mimic human learning and decision-making, is a critical tool for uncovering data insights and driving innovation. For example, the Home Office could use deep learning technology to help streamline intricate tasks like the handling of visa and immigration applications, improving employee productivity and accelerating visa decisions. Meanwhile, air traffic controllers could leverage deep learning to more accurately predict short-term congestion spikes at busy airports, helping improve the safety and efficiency of the national airspace system.

As government organizations explore ways to harness deep learning technology, database managers should start to prepare. Here are four key considerations for database management in this era of big data and deep learning.

Strengthen Database Foundations

The foundation of successful deep learning initiatives lies in high-quality, real-world data and consistently high-performing databases. While AI can process massive datasets at unprecedented speeds, government databases must be able to support the complex processing requirements needed to extract actionable insights.

To meet the growing demands of advanced AI algorithms and deep learning, government IT teams need to plan and execute effective strategies for their data and databases. This could include upgrading to more powerful and efficient databases that offer high throughput, scalable processing power, and low latency.

Harness the Cloud

Database managers are increasingly turning to cloud-hosted database services to help manage the vast amounts of data government organizations generate and collect. Compared with on-premises data centers, cloud-based solutions are less constrained by operational and technical requirements. This means cloud-hosted databases can scale more quickly and easily to process growing amounts of data. When managed effectively, cloud-hosted databases can also help organizations optimize IT spend and improve performance across the technology stack.

But Don’t Rule Out On-Premises

However, factors like an uncertain economy, budget constraints, and high up-front migration costs are causing some public sector organizations to hold back on cloud investments. And that is a valid choice. The reality is that not all database workloads perform optimally in the cloud, and migrating might introduce unnecessary complexities. Additionally, inflationary pressures have impacted some pay-as-you-go cloud models, making on-premises database solutions an attractive and reliable option.

Prioritize Observability, Regardless of Environment

Government CIOs must manage concerns around cost, productivity, and efficiency whether they prioritize cloud-hosted or on-premises databases. That’s where observability technology can make a difference.

By implementing observability, IT pros can get a comprehensive view of the IT environment and databases – whether they’re on-premises, in the cloud, or in a hybrid environment. Observability technology discovers and maps the data estate and provides health and daily performance metrics. Down-to-the-second data collection allows teams to pinpoint the issues behind an error or outage, accelerating root-cause analysis and remediation. With observability, database teams can help ensure the success of deep learning implementations, enabling the government to more effectively serve its citizens.
